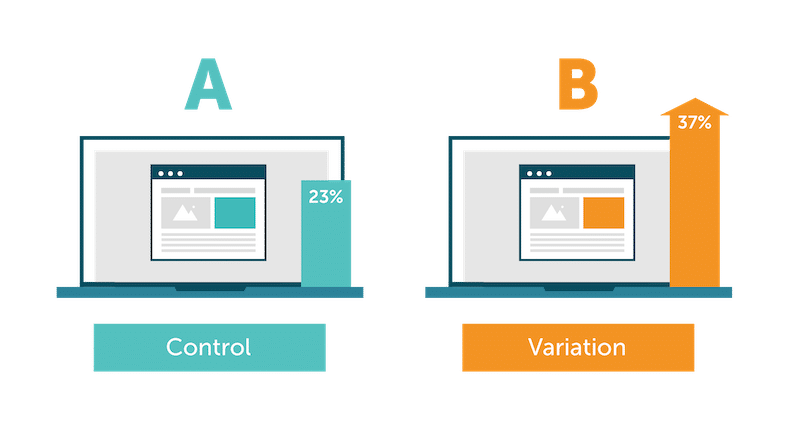

A/B testing, also known as split testing, is a powerful technique used in email marketing to determine which version of an email campaign performs best with the target audience. It involves sending two or more variations of an email to a sample group of subscribers and analyzing the results to determine which version leads to greater engagement, conversions and revenue.
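
As a rough illustration of the splitting step, the sketch below randomly assigns a sample of subscribers to two variants. The function name, the `test_fraction` parameter and the idea of holding back the remaining subscribers are assumptions made for this example, not part of any particular email platform.

```python
import random

def split_for_ab_test(subscribers, test_fraction=0.2, seed=None):
    """Randomly pick a test sample and split it evenly into groups A and B.

    Hypothetical helper: `test_fraction` is the share of the list used for
    the test; everyone else is returned as `remainder`.
    """
    rng = random.Random(seed)
    shuffled = list(subscribers)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)          # random order removes selection bias

    test_size = int(len(shuffled) * test_fraction)
    test_size -= test_size % 2     # keep both groups the same size
    half = test_size // 2

    group_a = shuffled[:half]
    group_b = shuffled[half:test_size]
    remainder = shuffled[test_size:]
    return group_a, group_b, remainder

# Example with made-up addresses
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b, remainder = split_for_ab_test(subscribers, test_fraction=0.2, seed=42)
print(len(group_a), len(group_b), len(remainder))
```

Random assignment matters because it keeps the two groups comparable, so a difference in results can be attributed to the email variation rather than to who received it.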

Where you can use A/B testing

A/B testing can be used in various types of email marketing campaigns, such as:

- Newsletter campaigns: A/B testing can help optimize newsletter campaigns by identifying which subject lines, content, design and calls to action (CTAs) lead to more opens, clicks and conversions.

- Pop-up windows: Pop-ups are effective tools for capturing potential customers and increasing conversions, but they can also be annoying if not properly designed and targeted. A/B testing can help determine the best timing, design, messaging and targeting for pop-ups to maximize their effectiveness.

- Automation: Automated email campaigns, such as welcome emails, abandoned cart emails and post-purchase emails, can benefit from A/B testing to determine the best actions, content and CTAs for greater customer loyalty and sales.

Common mistakes when doing A/B testing

Here are some common mistakes to avoid when conducting A/B testing in email marketing:

- Testing too many variables: Testing too many variables at once can make it difficult to determine which changes led to the improved results. It is best to test one variable at a time to obtain accurate information.

- Not testing long enough: Testing for too short a period of time can lead to inconclusive results. It is recommended to test for at least one or two weeks to obtain statistically significant results.

- Testing on a small sample size: Testing on a small sample size may lead to inaccurate results. Testing on a sufficiently large sample size is recommended to ensure that the results are statistically significant.

A/B testing best practices

Here are some best practices for A/B testing in email marketing:

- Identify your goals: Before you conduct A/B tests, define your objectives and the metrics you want to measure. This will help you determine which variables to test and how to interpret the results.

- Test one variable at a time: Testing one variable at a time will help you isolate the impact of each change and get accurate information.

- Use a large enough sample size: To make sure your results are statistically significant, test on a large enough sample size.

- Track your results: Regularly monitor A/B test results to see which variant performs best and make data-driven decisions.

How many subscribers are needed for a test?

The number of subscribers required for definitive results in A/B testing depends on several factors, such as the size of the difference you expect to see, the level of statistical significance you want to achieve, and the amount of variability in your data.

In general, the larger your sample size, the more reliable your results will be and the smaller the differences you will be able to detect. However, there is no single answer to how many subscribers are needed for definitive results, as it varies based on the above factors.

As a general rule of thumb, test on a sample that is large enough to achieve 80% statistical power, which means that you have an 80% chance of detecting a statistically significant difference if one really exists.
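
To make the 80% power rule concrete, here is a minimal sketch of a sample size estimate for comparing two rates (for example, open rates). It uses the standard normal-approximation formula for two proportions; the function name, the 5% significance level and the example rates are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate subscribers needed in EACH variant (two-sided test).

    baseline_rate: current rate, e.g. 0.20 for a 20% open rate
    expected_rate: rate you hope the new variant achieves
    alpha:         significance level (assumed 5% here)
    power:         chance of detecting the difference if it exists
    """
    effect = expected_rate - baseline_rate
    if effect == 0:
        raise ValueError("expected_rate must differ from baseline_rate")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power

    # Sum of the binomial variances of the two groups
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))

    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Example: detecting a lift from a 20% to a 22% open rate
print(sample_size_per_variant(0.20, 0.22))
```

The smaller the difference you want to detect, the more subscribers each variant needs, which is why small lists can usually only confirm large effects.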

With the help of a statistical significance calculation, we can determine whether the difference between variants is real or simply due to chance, and lead you to a safe conclusion.
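
As an illustration only (not necessarily the calculation referred to above), a common way to compare two open or click rates is a two-proportion z-test. The sketch below assumes each subscriber either did or did not perform the action; the function name and the example numbers are made up.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Return (z, p_value) for the difference between two rates.

    successes_*: number of opens/clicks/conversions in each variant
    sent_*:      number of emails delivered in each variant
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b

    # Pooled rate under the assumption that both variants perform the same
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_error == 0:
        raise ValueError("rates are all 0% or 100%; the test is undefined")

    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_value

# Example with made-up numbers: 200 vs 260 opens out of 1,000 emails each
z, p_value = two_proportion_z_test(200, 1000, 260, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is statistically significant at the 5% level.")
else:
    print("The difference could be due to chance.")
```

A p-value below the chosen threshold (5% is a common convention) suggests the difference is unlikely to be due to chance alone.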

A/B testing case studies

Here are some real-life examples of businesses that used A/B testing in email marketing and the results they achieved:

- Airbnb: Airbnb used A/B testing to determine which email subject lines would lead to more bookings. By testing different subject lines, they were able to increase their open rates by 2.6%.

- HubSpot: HubSpot used A/B testing to determine which CTA button color would drive more clicks in their email campaigns. By testing different colors, they were able to increase click-through rates by 21%.

- Grammarly: Grammarly used A/B testing to optimize its welcome email campaign. By testing different subject lines and content, they were able to increase open rates by 10% and click-to-open rates by 46%.

Conclusion

In conclusion, A/B testing is a powerful tool in email marketing that can help optimize your campaigns and increase loyalty and revenue. By following best practices and avoiding common mistakes, businesses can get accurate information and make data-driven decisions to improve their email marketing performance.

If all of the above impressed you, ask us for a free email marketing consultation and we will show you how we help online businesses increase their revenue. You may have already seen some examples in our case studies. Just book your spot with us.